Introduction

Artificial intelligence is embedded in core business processes—from customer experience to supply chain optimization. As adoption accelerates, the wrong AI vendor choice doesn’t merely overshoot budget—it creates strategic liabilities.

This article is written for CIOs, CDOs, and digital/AI leaders inside enterprises, not for vendors. It focuses on the hidden costs of rework, lock-in, change fatigue, and compliance exposure, and on how to quantify them so that board conversations and RFPs become more concrete.

For a pre-mortem on typical failure modes, see our article on four classic pitfalls in AI vendor selection. For the structured evaluation model and scorecard, see our six-dimension AI vendor evaluation framework.

The Visible Costs: When ‘Wrong’ Is Just Expensive

The immediate damage shows up in budgets and the P&L, yet it is often underestimated.

• Pilot spend that doesn’t convert to production value.

• Integration work that becomes an ongoing tax on IT and ops.

• Pricing and terms that escalate as usage grows.

These costs are only the starting point; the deeper risks follow.

The Hidden Costs: When ‘Wrong’ Becomes Strategic Failure

Credibility erosion: Failed AI initiatives weaken leadership's standing with boards and peers, making future investments harder to win.

Customer trust loss: Poor AI performance in customer-facing flows erodes brand trust far faster than that trust was built.

Opportunity cost: Delays compound while competitors scale AI, widening efficiency and innovation gaps.

Compliance and regulatory exposure: Weak governance invites privacy, bias, and safety failures with associated fines and reputational damage.

Innovation stall through lock-in: Closed ecosystems and inflexible agreements slow adoption of better models and architectures.

How to Quantify the Hidden Costs

To make this concrete for boards and RFPs, quantify each category along three axes: direct cost, indirect cost, and risk-adjusted exposure.

• **Rework and delivery drag**: Direct: extra sprints or FTE months caused by rework and integration issues. Indirect: delayed time-to-value (e.g., 3–6 months lost).

• **Lock-in and switching cost**: Direct: migration cost (data export, rebuilding integrations, retraining users). Risk-adjusted: opportunity cost of staying locked in (e.g., a 20–50% premium on future deals).

• **Change fatigue and credibility loss**: Direct: approximate cost of delayed initiatives, abandoned projects, and reduced adoption. Indirect: weakened executive credibility that constrains future funding.

• **Compliance and regulatory exposure**: Direct: potential fine ranges (e.g., EU AI Act fines of up to €35M or 7% of worldwide annual turnover, whichever is higher, for the most serious violations), remediation costs, and incident response overhead. Risk-adjusted: reputational damage multiplier.
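The three axes can be rolled up into one figure per category. The sketch below is a minimal illustration, not a prescribed model: it assumes risk-adjusted exposure is computed as probability times impact (a common expected-value convention that this article does not itself specify), and every name and number in it (`HiddenCost`, the probabilities, the EUR amounts) is a hypothetical placeholder to be replaced with your own estimates.

```python
from dataclasses import dataclass


@dataclass
class HiddenCost:
    """One hidden-cost category scored on the three axes (figures in EUR)."""
    name: str
    direct: float       # e.g. extra FTE months x loaded monthly rate
    indirect: float     # e.g. value lost to delayed time-to-value
    impact: float       # cost if the risk event occurs (fine, forced migration)
    probability: float  # estimated likelihood of that event, between 0 and 1

    @property
    def risk_adjusted(self) -> float:
        # Assumption: expected-value convention, probability x impact.
        return self.probability * self.impact

    @property
    def total(self) -> float:
        return self.direct + self.indirect + self.risk_adjusted


# Hypothetical placeholder estimates, for illustration only.
categories = [
    HiddenCost("Rework and delivery drag", 180_000, 250_000, 0, 0.0),
    HiddenCost("Lock-in and switching cost", 120_000, 0, 400_000, 0.5),
    HiddenCost("Change fatigue and credibility loss", 0, 150_000, 200_000, 0.25),
    HiddenCost("Compliance and regulatory exposure", 60_000, 40_000, 2_000_000, 0.05),
]

# One row per category, then the overall figure.
for c in categories:
    print(f"{c.name:<40} {c.total:>12,.0f}")

grand_total = sum(c.total for c in categories)
print(f"{'Total estimated hidden cost':<40} {grand_total:>12,.0f}")
```

Running the same calculation with a low and a high set of estimates gives the range for each category, which is usually more defensible in front of a board than a single point figure.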

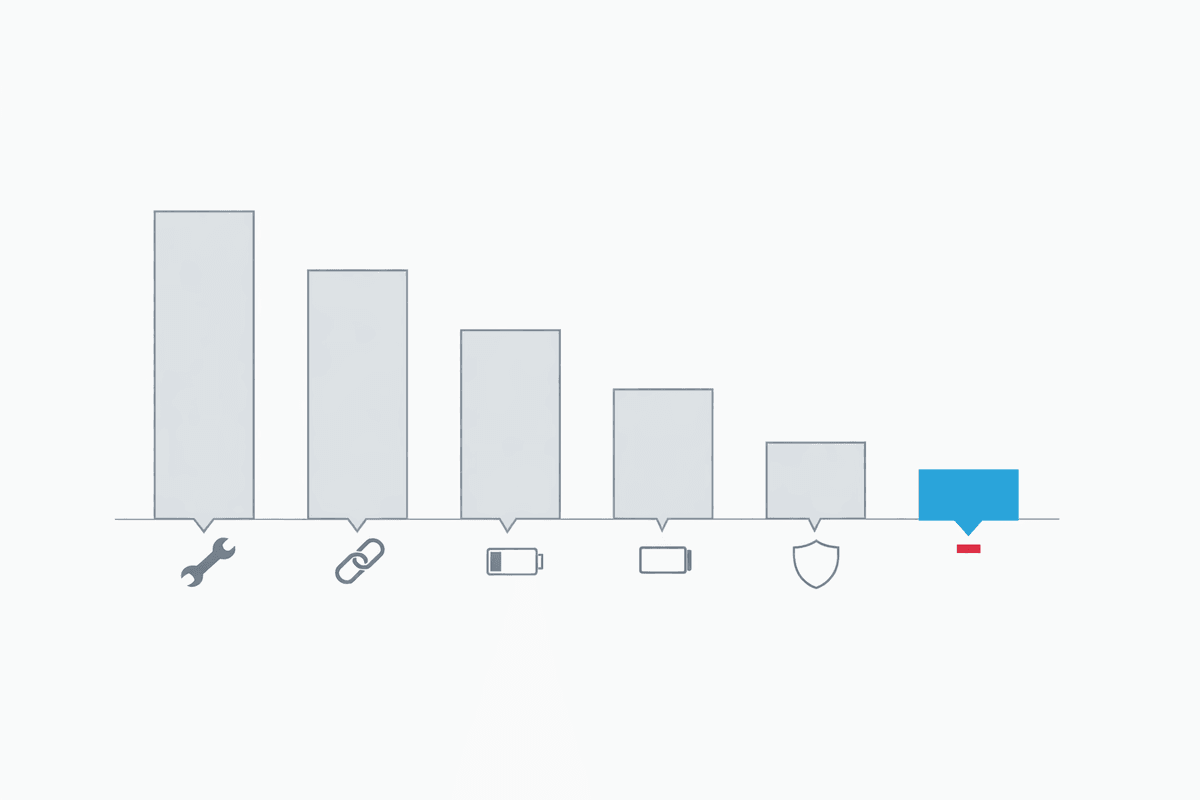

On a single board slide, show three things: 3–5 labeled bars, one per hidden-cost category with its estimated range; the decision options (go, no-go, renegotiate); and the residual risk if you proceed.

Why These Costs Compound Over Time

• Sunk costs encourage staying with an underperforming vendor and renewing by default.

• Workarounds spawn shadow IT and expand your attack surface.

• Public and regulatory scrutiny intensifies once issues surface.

A contained misstep can become an enterprise-wide drag if left unaddressed.

How to Use This Article With the Framework

Use the impact categories here to align executives on stakes and risk appetite. Then apply the evaluation framework to mitigate them:

• To prevent pilot churn and fragile builds, see the Six Dimensions (Technical Depth, Scalability).

• To reduce integration tax, see Integration Ease.

• To contain compliance risk, see Security & Compliance.

• To preserve agility, see Flexibility (portability, interoperability, exit support).

For a concise pre-mortem on what typically goes wrong, see the Pitfalls article.

Practical Safeguards for Leaders

• Anchor pilots to production SLOs and business KPIs.

• Demand evidence: security artifacts, references, performance data under realistic load.

• Bake in exit and portability (contractual and technical) at the outset.

• Govern AI like any critical system: risk owners, controls, auditability.

• Use this article as input to a working session with your internal team and existing advisors; it is not a substitute for due diligence on the vendors pitching to you.

For full criteria and a weighted scorecard, use the Six Dimensions framework.
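As a rough illustration of how a weighted scorecard works, the sketch below computes a weighted average over the five dimensions named in this article. It is not the framework's actual scorecard: the sixth dimension is not named here and is left out, and the weights, the 1–5 scale, the function name `weighted_score`, and both vendor profiles are hypothetical assumptions.

```python
from typing import Mapping

# Hypothetical weights; they must sum to 1.0. Only the five dimensions
# named in this article are included.
WEIGHTS = {
    "Technical Depth": 0.25,
    "Scalability": 0.20,
    "Integration Ease": 0.20,
    "Security & Compliance": 0.20,
    "Flexibility": 0.15,
}


def weighted_score(scores: Mapping[str, float],
                   weights: Mapping[str, float] = WEIGHTS) -> float:
    """Return the weighted average of per-dimension scores (assumed 1-5 scale)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[d] * scores[d] for d in weights)


# Hypothetical vendor assessments on a 1-5 scale.
vendor_a = {"Technical Depth": 4, "Scalability": 3, "Integration Ease": 2,
            "Security & Compliance": 5, "Flexibility": 3}
vendor_b = {"Technical Depth": 3, "Scalability": 4, "Integration Ease": 4,
            "Security & Compliance": 4, "Flexibility": 4}

score_a = weighted_score(vendor_a)
score_b = weighted_score(vendor_b)
print(f"Vendor A: {score_a:.2f}")
print(f"Vendor B: {score_b:.2f}")
```

Agreeing on the weights before any vendor is scored keeps the exercise from being tuned toward a preferred outcome.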

Conclusion

The cost of a poor AI vendor choice extends far beyond budget. Frame the stakes with this impact lens, pressure-test vendors against the Six Dimensions, and use the Pitfalls article to anticipate failure modes before they happen.

Sources

- [01] Gartner — Hype Cycle for Artificial Intelligence (2025 overview)

- [02] Forrester — The Forrester Wave™: AI Services, Q2 2024

- [03] BCG — Recognized as a Leader in AI Services (press release, May 2024)

- [04] Deloitte — State of Generative AI in the Enterprise (2024 year-end)

- [05] McKinsey — The State of AI 2025 (report PDF)

- [06] Harvard JOLT — The Paradox of Data Portability and Lock-In Effects (2023)

- [07] PwC — Responsible AI Toolkit (updated 2024 hub)

- [08] World Economic Forum — AI Governance Alliance (briefing paper series, 2024–2025)

- [09] European Commission — Artificial Intelligence Act (EU AI Act, 2025)